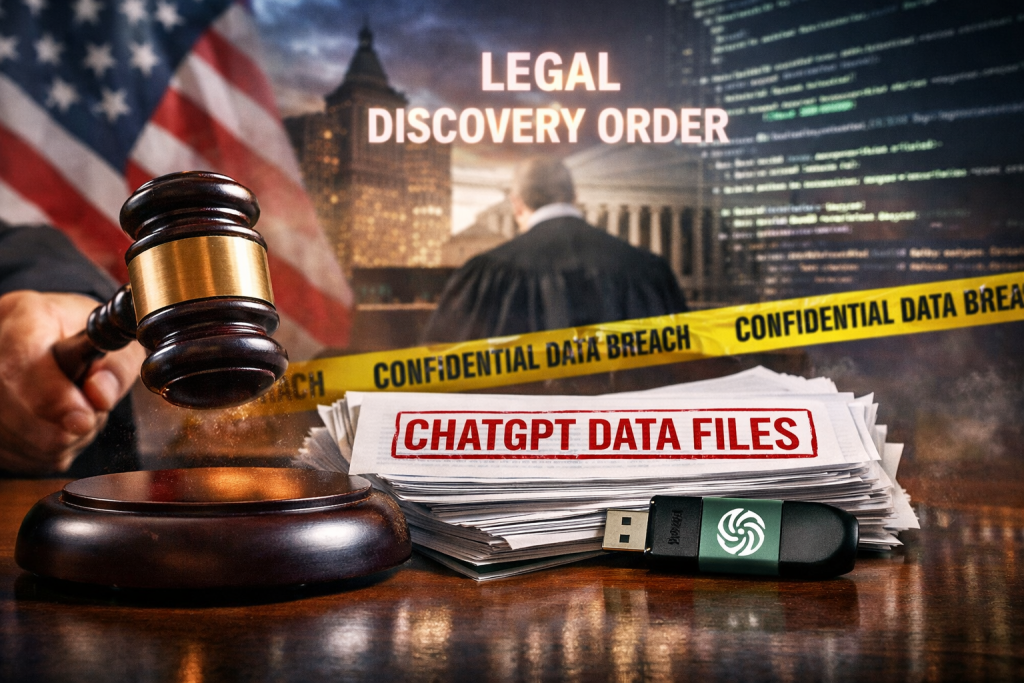

S.D.N.Y. Discovery Breach: OpenAI Compelled to Surrender 20 Million Chat Logs

The Erosion of the Voluntariness Shield

Federal litigation has reached a decisive inflection point as OpenAI fails to insulate its internal user data from hostile discovery. On January 5, 2026, U.S. District Judge Sidney H. Stein affirmed a high-stakes discovery order requiring the artificial intelligence giant to produce 20 million de-identified ChatGPT logs.

This ruling effectively dismantles the corporate privacy shield that AI developers have long invoked to keep intellectual property plaintiffs away from raw output evidence.

The decision stems from a consolidated multidistrict litigation involving high-profile news organizations, including the New York Times Co. and the Chicago Tribune.

By validating the prior order from Magistrate Judge Ona T. Wang, the court has signaled that the relevance of training data and user interactions outweighs the administrative burden of production. This precedent serves as a warning to the broader Silicon Valley ecosystem that user-submitted queries are no longer a protected sanctuary in federal copyright disputes.

Legal defense strategies previously relied on the theory that user chats carried the same privacy expectations as private telecommunications. OpenAI’s legal team, led by Latham & Watkins and Keker, Van Nest & Peters, argued that wholesale disclosure would compromise the confidentiality of millions of users.

However, Judge Stein’s analysis distinguished this matter from traditional wiretap cases, noting that ChatGPT users voluntarily transmit their data to a third-party platform.

This distinction creates a significant chokepoint for AI companies attempting to rely on precedents drawn from US Securities and Exchange Commission cases. Because OpenAI’s ownership of the logs is uncontested, the court found that the users behind these chats lacked a privacy interest compelling enough to halt discovery. The practical result is that developers are now exposed to granular analysis of how their models reproduce protected journalistic content.

Strategic Shifts in Discovery Economics

For AI firms, litigation exposure is now tied to the "discoverable" nature of their operational backend. OpenAI’s proposal to reduce the production burden by running searches and producing only filtered results was rejected. The Southern District of New York (S.D.N.Y.) has made clear that a producing party is not entitled to the "least burdensome" path once substantial relevance is shown.

This ruling forces a major shift in how LLM providers account for litigation costs and potential licensing settlements. If the 20 million logs reveal a consistent pattern of paywall circumvention or direct copying, the fair use defense for model training becomes commercially untenable for most providers. Recurring litigation is no longer an edge case but a core operating expense for generative AI firms.

Legal departments must now treat data logs as fast-growing liabilities rather than proprietary assets. Every user prompt that produces a copyright-infringing output is now a recorded piece of evidence that can be used against the provider in open court.

The economic friction here is profound, as it forces companies to choose between preserving data for model improvement and deleting data to limit exposure in future suits.

| Former Status Quo | Strategic Trigger | 2026 Reality |
|---|---|---|
| User logs were shielded by broad “privacy as a defense” arguments. | S.D.N.Y. affirms production of 20 million de-identified chat records. | Voluntarily submitted LLM data is fully discoverable for IP relevance. |
| Platforms controlled the search parameters for discovery samples. | Judge Stein rejects “least burdensome” search-only discovery models. | Plaintiffs gain wholesale access to raw logs for expert reconstruction. |
| AI training methods were treated as opaque “black box” secrets. | Consolidation of 16 suits forces disclosure of output logic. | Operational transparency is now a mandatory litigation cost. |

The Doctrine of Proportionality Reimagined

Under Federal Rule of Civil Procedure 26(b)(1), discovery must be proportional to the needs of the case, which requires the court to weigh the importance of the discovery against the burden on the producing party. In this case, the court determined that the value of the intellectual property at stake justified the extraordinary production request. The news outlets argue that these logs are the only way to prove that ChatGPT obviates the need for original reporting.

This ruling effectively introduces a form of regulatory cram-down on AI developers who previously operated with minimal oversight of their output data. By forcing the hand of OpenAI, the Southern District of New York has created a roadmap for future plaintiffs in the Authors Guild and visual artist suits. The legal fortress around large language models has been breached by the very users who populate it with data.

Economic leverage is shifting toward content owners who can now demand raw access to the "black box." For years, AI companies claimed that their training processes were too complex to be dissected by traditional legal means. Judge Stein’s order signals that the judiciary is no longer intimidated by the scale of big data, provided the plaintiff’s standing is firm.

Forensic Linguistics and the Re-identification Risk

While the court has mandated de-identification, the logs remain immensely valuable to the plaintiffs and could transform the litigation. Lawyers are increasingly using forensic experts to analyze residual information that survives simple anonymization. Even without names or email addresses, the syntax and specific queries of 20 million users can reveal how the model was prompted to bypass paywalls.

This creates a second-order risk of "Shadow AI" exposure, where employees may have pasted proprietary code or sensitive strategy documents into the chatbot.

These 20 million logs may contain sensitive data from employees at other companies, turning a copyright case into a trade secret vulnerability. For OpenAI, producing these logs is not just a copyright risk but a potential breach of trust with its most valuable enterprise clients.
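To illustrate why de-identification does not neutralize the evidentiary value of a log, here is a minimal Python sketch assuming a deliberately naive, pattern-based scrubber. The `deidentify` function, its regular expressions, and the sample log entry are all hypothetical; they are not the de-identification protocol ordered in this case. The point is only that stripping direct identifiers such as email addresses and phone numbers leaves the substance of the request intact.

```python
import re

# Hypothetical, deliberately naive scrubber: it targets only direct identifiers.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def deidentify(text: str) -> str:
    """Replace email addresses and phone numbers with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


# Hypothetical log entry; not drawn from any real dataset.
log = (
    "I'm jane.doe@example.com, call me at +1 212-555-0100. "
    "Paste the full text of today's paywalled front-page article."
)

scrubbed = deidentify(log)

# The identifiers are replaced, but the request itself, the part a
# plaintiff's expert cares about, survives verbatim.
print(scrubbed)
```

Real de-identification pipelines are typically more thorough, but the same gap applies: removing who asked does not remove what was asked or what the model returned.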

The "Stein Standard" established in this case suggests that de-identification is a sufficient safeguard for the court, even if it is not a perfect one for the user. This creates a massive insurance gap for companies that rely on LLMs for internal productivity. If a court can compel the release of 20 million logs today, there is no reason it cannot compel the release of an entire enterprise’s prompt history tomorrow.

Jurisdictional Friction and International Fallout

The 2026 ruling also creates immediate friction with international data regimes, particularly the GDPR and the UK’s Data Protection Act. While Judge Wang’s order includes protective measures and de-identification, the wholesale nature of the transfer could trigger inquiries from European regulators. OpenAI’s attempt to use these international conflicts as grounds for a stay was ultimately unsuccessful before the Manhattan court.

Counsel for the news plaintiffs have already begun preparing for the analysis that these 20 million logs will provide. Experts suggest that even "anonymized" logs can reveal patterns of systematic infringement when mapped against the training dates of the models. The outcome of this production will likely dictate the settlement terms for the remainder of the consolidated lawsuits throughout the fiscal year.

We are witnessing the start of a "Discovery Arms Race" between tech giants and content publishers. As firms like Susman Godfrey, counsel to The New York Times, pioneer these data-heavy litigation tactics, other copyright holders will follow suit in various jurisdictions. The S.D.N.Y. has effectively normalized the idea that AI companies must open their server logs to the same scrutiny as a company’s email archives.

The financial stakes of this discovery breach could run into the hundreds of millions of dollars for the involved media conglomerates. If the 20 million logs show a pattern of users successfully asking ChatGPT to read the New York Times for free, the fair use defense is essentially dead. This would force OpenAI into a massive licensing settlement waterfall, potentially costing the company billions in back-dated royalties.

The market impact extends to Anthropic and other competitors, who are now likely to face similar discovery motions. If S.D.N.Y. remains the venue of choice for these suits, the "Stein Standard" could become a de facto national benchmark, even though a district court ruling binds no other court. Investors are already pricing in this increased litigation risk, leading to a temporary cooling of the aggressive valuations seen in late 2025.

OpenAI’s Chief Strategy Officer, Jason Kwon, recently called for a new form of "AI Privilege" to protect user-chatbot conversations from subpoenas. The January 5 ruling effectively rejects this concept, placing AI interactions on the same level as standard enterprise software. The court has affirmed that until Congress creates a specific privilege, AI developers are subject to the same discovery rules as any other software provider.

This leaves the industry in a state of regulatory cram-down, where judicial orders are moving faster than legislative frameworks or executive orders. For general counsel (GCs), the priority is no longer just winning the case but managing the massive exposure that comes from the discovery process itself. The "logs" are the new "emails," and they are being read by the very people OpenAI is trying to defeat.

The Mandate for Corporate Governance

For the C-suite, the takeaway is an immediate need for an audit of all data-sharing agreements and user terms of service. The court’s emphasis on the "uncontested ownership" of logs by the AI provider suggests that stricter user-side privacy controls might be the only way to prevent future disclosure. Companies can no longer assume that a protective order will keep their most sensitive user interaction data out of a competitor's hands.

The GC Discovery Checklist (2026 Strategy)

- Inventory Sanctioned AI: Map all internal models and embedded SaaS AI tools.
- Shadow AI Audit: Identify unapproved public chatbot usage to mitigate trade secret exposure.
- Log Governance: Implement "Zero Data Retention" (ZDR) agreements where legally permissible.
- Privilege Controls: Establish clear boundaries for when a prompt may be protected by attorney-client privilege.
- Forensic Readiness: Prepare for the possibility of surrendering de-identified logs in third-party suits.

The growing liability attached to AI interactions means that every prompt and output is a potential piece of evidence in future regulatory inquiries. As Microsoft, OpenAI’s largest investor and a co-defendant in the Times suit, and other stakeholders watch closely, the cost of compliance is rapidly escalating beyond mere legal fees. The 2026 legal climate is one of aggressive transparency, where the convenience of the developer is no longer a valid reason to keep the "black box" closed.

As we enter 2026, the legal landscape for AI has shifted from theoretical copyright questions to granular evidentiary battles in federal court. The S.D.N.Y. ruling on 20 million logs is the first major domino to fall in a broader transparency movement. It sets a high-water mark for what is considered proportional discovery in the age of massive large language model interaction datasets.

Companies that fail to adapt their data retention and de-identification strategies will find themselves on the wrong side of a sanctions motion. The "black box" has been opened, and the data inside will now tell the story of the next decade of intellectual property law. Every interaction is now a potential liability, and every log is a record of model behavior that can be held against the creator.

Strategic leaders must now treat their chat logs as a liability that requires the same governance as financial records. The transition from "experimentation" to "litigation-ready" infrastructure is the primary challenge for the 2026 fiscal year. This ruling is merely the beginning of a long process of bringing artificial intelligence under the ordinary discovery rules of the American judicial system.

People Also Ask

What is the significance of the 20 million ChatGPT log order?

The order forces production of a vast, de-identified dataset of user conversations, establishing that voluntarily submitted LLM chat data is discoverable in copyright litigation.

Can OpenAI protect user privacy during discovery?

Logs must be de-identified to strip personal information, but plaintiffs’ experts can still analyze the content to reconstruct infringement patterns.

Who is the judge presiding over the consolidated OpenAI copyright case?

District Judge Sidney H. Stein of the Southern District of New York is overseeing the consolidated proceedings.

Why were OpenAI’s privacy arguments rejected?

The court held that users voluntarily submitted their communications to ChatGPT, reducing their privacy expectations compared to unlawful interception cases.

Which news organizations are suing OpenAI?

Plaintiffs include The New York Times Company, Chicago Tribune, and additional regional publishers participating in the consolidated action.

Which law firms are representing OpenAI in the S.D.N.Y. case?

OpenAI’s defense includes Latham & Watkins; Keker, Van Nest & Peters; and Morrison & Foerster.

Is the discovery order final?

Yes. Judge Stein affirmed the production order on January 5, 2026, declining to narrow its scope or stay production.

How does this impact AI companies like Meta or Google?

The ruling warns the AI industry that courts will favor broad discovery access over developer-controlled search restrictions when IP infringement is alleged.
